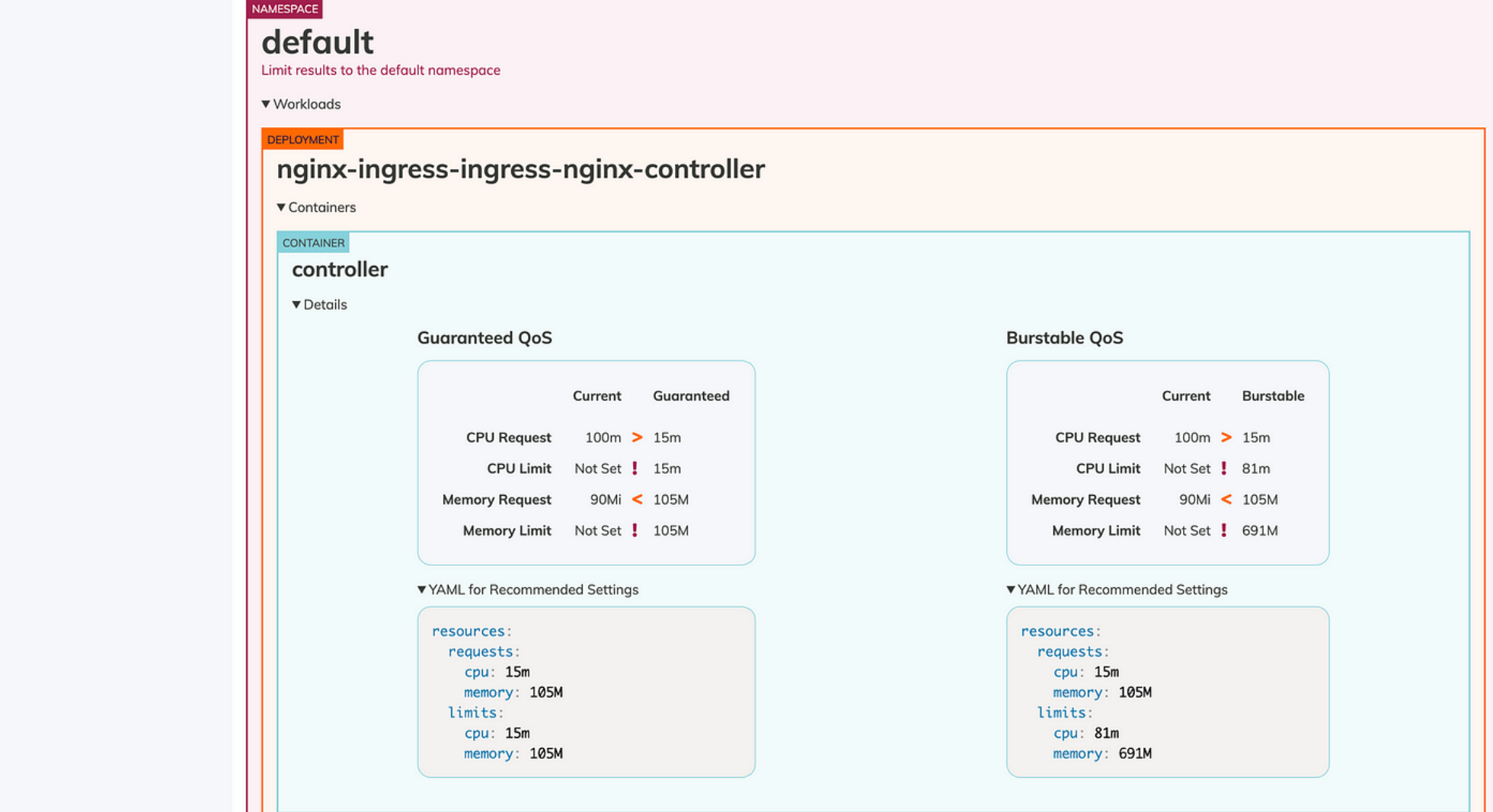

In most Amazon Elastic Kubernetes Service (EKS) clusters, the EC2 instances that run containerized workloads account for the bulk of the bill. Sizing that compute correctly requires a clear understanding of what each workload actually needs, so it is important to set pod resource requests and limits appropriately and to revisit them as usage changes. Choices such as instance size and storage type also affect workload performance, and getting them wrong can lead to unexpected cost increases and reliability problems.
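To make the cost of over-sized requests concrete, here is a minimal Python sketch that estimates the hourly cost of capacity reserved by requests but left unused. It assumes a simplified model in which an instance's price can be split into a per-vCPU rate and a per-GiB rate (EC2 actually prices whole instances), and the rates, pod names, and usage figures are hypothetical placeholders, not real AWS prices.

```python
from dataclasses import dataclass

# Hypothetical unit rates derived by splitting an instance's hourly price
# into CPU and memory components; substitute figures for your own instances.
PRICE_PER_VCPU_HOUR = 0.04
PRICE_PER_GIB_HOUR = 0.005


@dataclass
class PodUsage:
    name: str
    cpu_request_cores: float  # cores reserved via resources.requests.cpu
    cpu_used_cores: float     # observed average usage
    mem_request_gib: float    # GiB reserved via resources.requests.memory
    mem_used_gib: float       # observed average usage


def idle_cost_per_hour(pods: list[PodUsage]) -> float:
    """Cost of capacity that is reserved by requests but not used."""
    total = 0.0
    for p in pods:
        # Usage above the request is not "idle", so clamp at zero.
        idle_cpu = max(p.cpu_request_cores - p.cpu_used_cores, 0.0)
        idle_mem = max(p.mem_request_gib - p.mem_used_gib, 0.0)
        total += idle_cpu * PRICE_PER_VCPU_HOUR + idle_mem * PRICE_PER_GIB_HOUR
    return total


if __name__ == "__main__":
    pods = [
        PodUsage("api", 2.0, 0.5, 4.0, 1.5),      # heavily over-requested
        PodUsage("worker", 1.0, 0.9, 2.0, 1.8),   # close to actual usage
    ]
    hourly = idle_cost_per_hour(pods)
    # Roughly 730 hours in a month.
    print(f"idle capacity: ${hourly:.4f}/h, about ${hourly * 730:.2f}/month")
```

Because the scheduler reserves node capacity based on requests rather than actual usage, the idle amount computed here is capacity you pay for on the underlying EC2 instances without using it.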

Effective resource management in Amazon EKS is a balancing act. Pods need enough CPU and memory to run reliably, but over-provisioning wastes capacity and drives up costs. This is where the Goldilocks approach comes in: finding the resource allocation for each EKS workload that is neither too much nor too little.
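The "neither too much nor too little" idea can be illustrated with a small Python sketch: derive a target request from observed usage samples (a high percentile plus some headroom), then check whether the current request falls inside a tolerance band around that target. The 90th percentile, 15% headroom, and 20% tolerance are illustrative assumptions, and this is not the algorithm used by Goldilocks or by the Kubernetes Vertical Pod Autoscaler.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in the range 0-100."""
    if not samples:
        raise ValueError("no usage samples")
    ordered = sorted(samples)
    rank = max(math.ceil(pct / 100 * len(ordered)), 1)
    return ordered[rank - 1]


def recommend_request(samples: list[float], pct: float = 90.0,
                      headroom: float = 0.15) -> float:
    """Target request: a high percentile of usage plus a safety margin."""
    return percentile(samples, pct) * (1 + headroom)


def assess(current_request: float, samples: list[float], pct: float = 90.0,
           headroom: float = 0.15, tolerance: float = 0.2) -> tuple[str, float]:
    """Classify a request as too much, too little, or just right."""
    target = recommend_request(samples, pct, headroom)
    if current_request > target * (1 + tolerance):
        return "too much", target
    if current_request < target * (1 - tolerance):
        return "too little", target
    return "just right", target


if __name__ == "__main__":
    # Hypothetical CPU usage samples for one container, in millicores.
    cpu_millicores = [120, 150, 180, 200, 210, 250, 300, 320, 350, 400]
    for request in (1000, 400, 200):
        verdict, target = assess(request, cpu_millicores)
        print(f"request={request}m target~{target:.0f}m -> {verdict}")
```

Sizing to a high percentile rather than the peak avoids paying for rare spikes, while the headroom leaves room for growth. For memory, where exceeding the limit gets the container OOM-killed, a more conservative percentile is usually the safer choice.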